From chaos to deliverable

Connect your business tools to generate quotes, invoices, and reports with a simple question — no more switching between ten tabs.

Book a call

A recognized track record of excellence

Too many hours lost searching?

The right data, at the right time, in the right format.

Instant

Connected to your data in real time, our copilot answers without delay. No more waiting for reports: the relevant information is at your fingertips.

Discover data connection

Personalized

The copilot is trained on your documents and adapts to your business processes. It integrates your document templates and pricing grids so that every answer is specific to your company.

Explore customization

Simplicity

Centralize access to information. No more juggling between ten applications: ask your question, get a concise and actionable answer.

See a workflow example

Jiliac Copilot

Unify, transform, act

Our AI connects your sources (CRM, Drive, ERP), analyzes the context, and generates accurate documents, from invoices to reports, with no manual work.

Case studies

Real cases delivered in production.

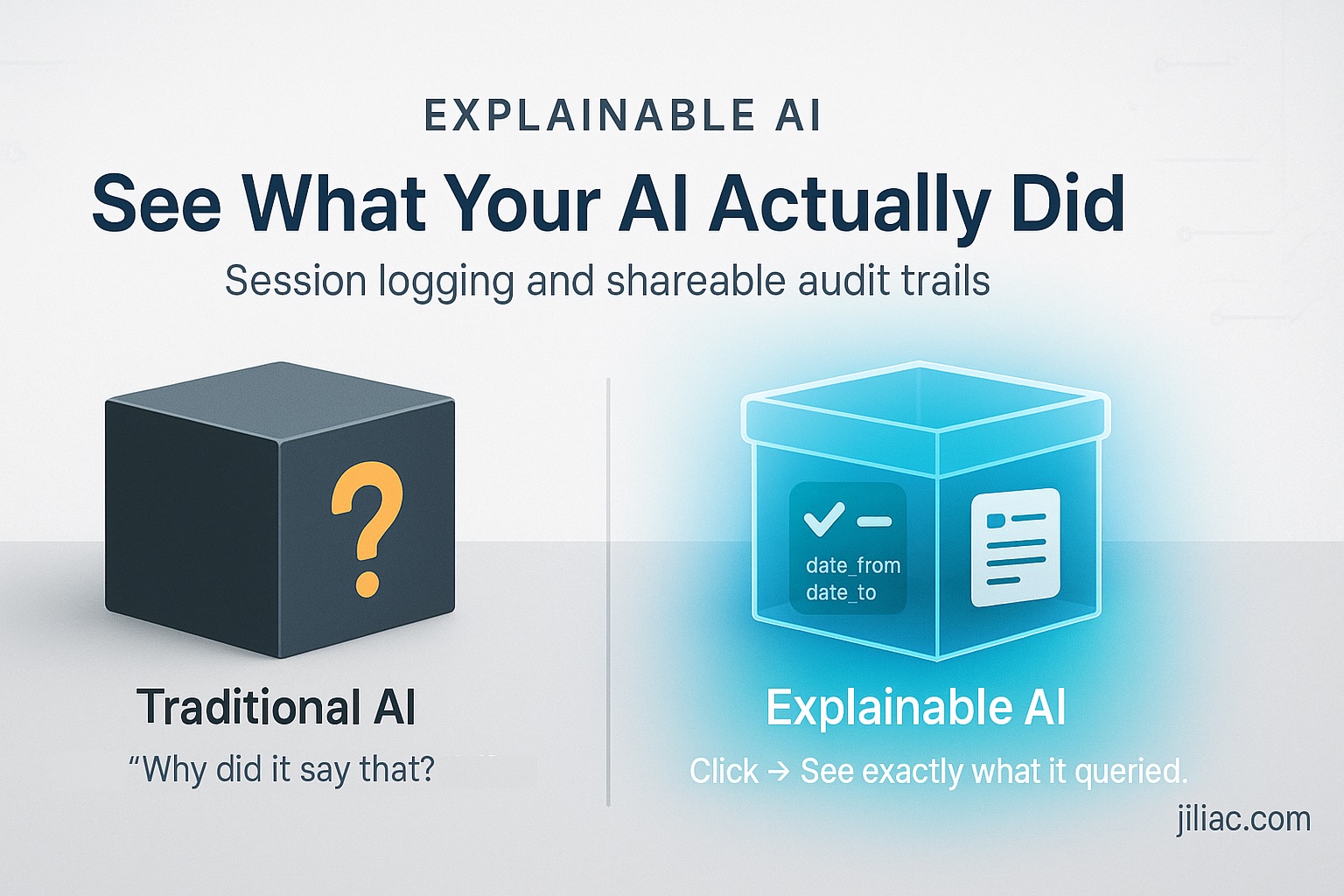

Building Explainable AI: Session Logging and Shareable Results

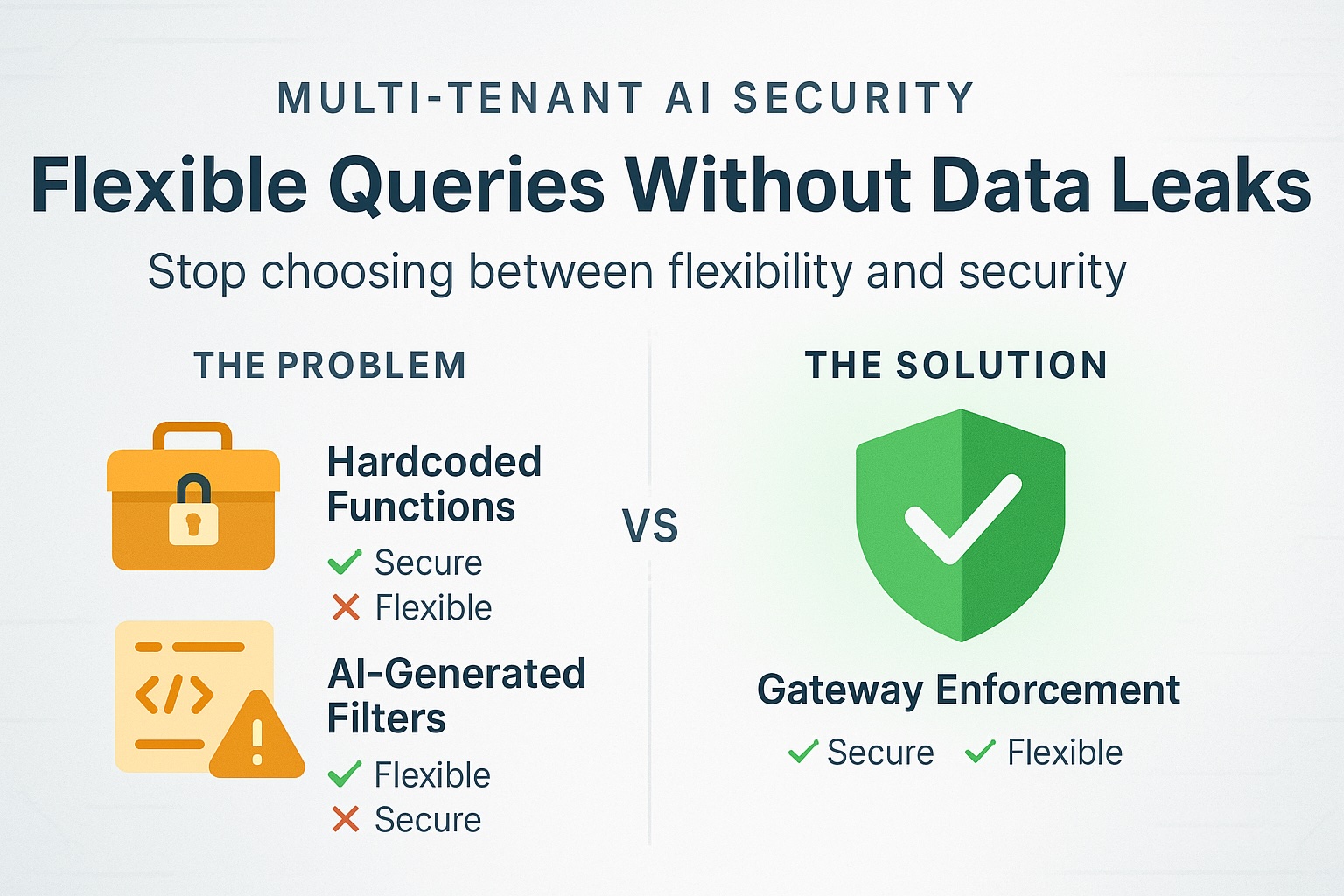

Multi-Tenant AI: Flexible Queries Without Data Leaks